| Simultaneous localization and mapping with limited sensing |

Kris Beevers

(beevek@gmail.com)

Rensselaer Polytechnic Institute Algorithmic Robotics Laboratory |

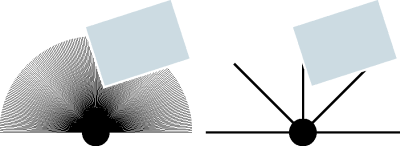

Our research aims to develop algorithms for SLAM on robots with much more limited sensing, such as small arrays of infrared rangefinders. Such sensors cost orders of magnitude less than laser rangefinders and consume less power and computation. However, they give only low-resolution, short-range feedback about the environment and thus are difficult to use for mapping.

(This is joint work with Wes Huang.)

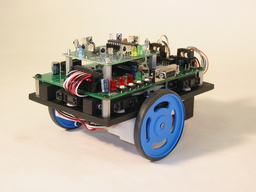

Mapping with an inexpensive robot such as the ARL Ratbot (right) is

challenging for several reasons. Most importantly, information

about the environment available via the robot's sensors is much more

restricted than for robots with scanning laser rangefinders. In

particular, it is generally impossible to extract distinctive

features of the environment (e.g., lines representing walls or

points representing corners) from a sensor scan taken from a single

pose. The problem is essentially one of partial

observability; similar issues arise with other types of sensors

as well, such as sonar sensors or monocular

cameras.

Mapping with an inexpensive robot such as the ARL Ratbot (right) is

challenging for several reasons. Most importantly, information

about the environment available via the robot's sensors is much more

restricted than for robots with scanning laser rangefinders. In

particular, it is generally impossible to extract distinctive

features of the environment (e.g., lines representing walls or

points representing corners) from a sensor scan taken from a single

pose. The problem is essentially one of partial

observability; similar issues arise with other types of sensors

as well, such as sonar sensors or monocular

cameras.

In order to do mapping with "sparse" (low spatial resolution) range-bearing sensors, it is necessary to extract features using scan data from several robot poses. This in turn leads to increased uncertainty in the map and pose estimates. Our research addresses strategies for SLAM with multi-pose data and ways to improve estimation accuracy in the face of high uncertainty. |

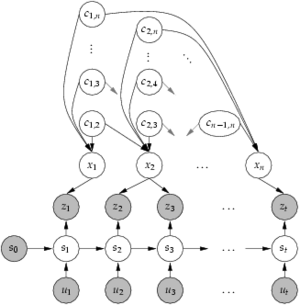

RBPF SLAM with sparse sensing

Algorithms for SLAM based on Rao-Blackwellized particle filtering (RBPF), in which the robot's trajectory estimate is represented by a set of samples, or particles, offer several advantages over other approaches such as extended Kalman filter (EKF) SLAM. Thus, while others have studied the partial observability problem in the context of EKF, our focus has been on RBPF algorithms.

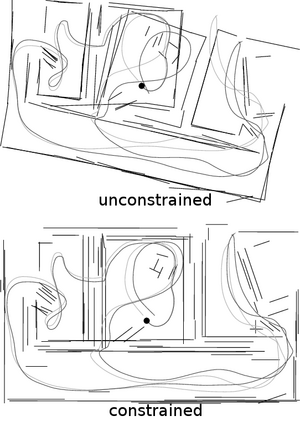

In order to extract features from sparse range-bearing data, we group consecutive individual scans from multiple robot poses into "multiscans." After accumulating several scans in a multiscan, feature extraction is then performed as though the data came from a single scan, and a standard SLAM update is applied.

Because multiscans contain data taken from multiple robot poses, a straightforward implementation of our approach requires feature extraction to be performed separately for each particle in the filter (particles have unique trajectories). This is a costly proposition. An alternative that we have developed is to extract features using only the expected (mean) trajectory estimate, and then transform the features for each particle. This approach yields sufficient accuracy for SLAM since multiscans are taken over short trajectories. If more accuracy is required, particles can be grouped into "strata," and features can be extracted once for each strata and then transformed.

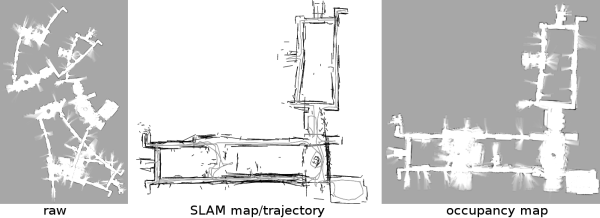

We have implemented our approach and applied it to real-world data from Radish, a SLAM dataset repository. Since our focus is on limited sensing, and most data on Radish is from laser rangefinders, we simply discard readings from all but five sensor orientations before mapping. Our algorithm has successfully built maps like the one shown above, of the Gates Building at Stanford University.

Publications

| Kristopher R. Beevers and Wesley H. Huang. SLAM with sparse sensing. 2006 IEEE International Conference on Robotics and Automation (ICRA 2006), pages 2285-2290, Orlando, May 2006. |

|

Ongoing workOur research on SLAM with limited sensing is ongoing and focuses on several areas. In particular, we are:

|

A key component of our approach is a recognition that the typical

RBPF SLAM model assumes the environment is unstructured,

i.e., that landmarks are randomly and independently distributed in

the workspace. In fact, this is rarely the case, as in indoor

environments where landmarks are placed methodically by an

architect. Thus, some correlation exists between landmarks,

breaking the traditional RBPF model. However, prior knowledge of

this correlation can be exploited to infer the relationships between

landmarks.

A key component of our approach is a recognition that the typical

RBPF SLAM model assumes the environment is unstructured,

i.e., that landmarks are randomly and independently distributed in

the workspace. In fact, this is rarely the case, as in indoor

environments where landmarks are placed methodically by an

architect. Thus, some correlation exists between landmarks,

breaking the traditional RBPF model. However, prior knowledge of

this correlation can be exploited to infer the relationships between

landmarks.